Bag of Words

DBoW Library

The library is composed of two main classes: Vocabulary and Database. The former is trained offline from a large set of images, whereas the latter can be built and expanded online. Both structures can be saved in binary or text format.
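The following minimal Python sketch makes the offline/online split concrete. The classes `ToyVocabulary` and `ToyDatabase` are hypothetical and are not the DBoW API. They assume that image features have already been quantized into integer word ids (a real vocabulary learns its words from feature descriptors), and they use tf-idf weights with an L1-based score. The vocabulary fixes its weights once from the training images. The database accepts new images at any time and keeps an inverted index (word id to entries), so a query only scores entries that share at least one word with it.

```python
import math
from collections import defaultdict


def l1_score(v, w):
    # 1 - 0.5 * ||v - w||_1 for L1-normalized sparse vectors; 1 means identical.
    keys = v.keys() | w.keys()
    return 1.0 - 0.5 * sum(abs(v.get(k, 0.0) - w.get(k, 0.0)) for k in keys)


class ToyVocabulary:
    """Hypothetical stand-in for a Vocabulary: trained once, offline."""

    def __init__(self, training_images):
        # Each training image is a list of word ids (features already quantized).
        n = len(training_images)
        doc_freq = defaultdict(int)
        for words in training_images:
            for w in set(words):
                doc_freq[w] += 1
        # idf weights are frozen once training is done.
        self.idf = {w: math.log(n / ni) for w, ni in doc_freq.items()}

    def transform(self, words):
        # L1-normalized tf-idf bag-of-words vector; unknown words are ignored.
        if not words:
            return {}
        counts = defaultdict(int)
        for w in words:
            if w in self.idf:
                counts[w] += 1
        v = {w: c / len(words) * self.idf[w] for w, c in counts.items()}
        total = sum(v.values())
        return {w: x / total for w, x in v.items()} if total > 0 else {}


class ToyDatabase:
    """Hypothetical stand-in for a Database: filled online with new images."""

    def __init__(self, vocabulary):
        self.voc = vocabulary
        self.entries = []                  # entry id -> BoW vector
        self.inverted = defaultdict(list)  # word id -> ids of entries containing it

    def add(self, words):
        v = self.voc.transform(words)
        entry_id = len(self.entries)
        self.entries.append(v)
        for w in v:
            self.inverted[w].append(entry_id)
        return entry_id

    def query(self, words, max_results=5):
        q = self.voc.transform(words)
        # Only entries sharing at least one word with the query are scored.
        candidates = {e for w in q for e in self.inverted.get(w, [])}
        results = [(e, l1_score(q, self.entries[e])) for e in candidates]
        results.sort(key=lambda r: r[1], reverse=True)
        return results[:max_results]


voc = ToyVocabulary([[1, 2, 3], [2, 3, 4], [4, 5, 6], [1, 5, 6]])
db = ToyDatabase(voc)
db.add([1, 2, 3])
db.add([4, 5, 6])
print(db.query([1, 2, 2]))
```

Keeping the vocabulary fixed is what lets the database grow cheaply: adding an image only transforms it and appends its id to a few index lists.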

Weighting

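Words in the vocabulary and in bag-of-words vectors are weighted. Four weighting measures are implemented to set the weight $w_i$ of word $i$:

- Term frequency (tf): $w_i = \frac{n_{id}}{n_d}$, where $n_{id}$ is the number of occurrences of word $i$ in document $d$ and $n_d$ is the total number of words in $d$.
- Inverse document frequency (idf): $w_i = \log \frac{N}{N_i}$, where $N$ is the number of documents and $N_i$ is the number of documents containing word $i$.
- Term frequency-inverse document frequency (tf-idf): $w_i = \frac{n_{id}}{n_d} \log \frac{N}{N_i}$.
- Binary: $w_i = 1$ if word $i$ is present in the vector, $0$ otherwise.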
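The four measures can be sketched in Python as follows, assuming the standard definitions: tf $= n_{id}/n_d$, idf $= \log(N/N_i)$, tf-idf is their product, and binary assigns 1 to every word present. The function `word_weights` and its parameters are hypothetical and chosen for illustration: a document is a list of word ids, and the corpus statistics $N$ and $N_i$ are passed in explicitly.

```python
import math
from collections import Counter

SCHEMES = ("tf", "idf", "tf-idf", "binary")


def word_weights(doc, n_docs, docs_with_word, scheme="tf-idf"):
    """Return {word: w_i} for one document under the given weighting scheme.

    doc            -- list of word ids occurring in document d (with repeats)
    n_docs         -- N, the number of training documents
    docs_with_word -- {word: N_i}, the number of documents containing each word
    """
    if scheme not in SCHEMES:
        raise ValueError(f"unknown scheme: {scheme}")
    counts = Counter(doc)  # n_id for each distinct word i
    n_d = len(doc)         # total number of words in d
    weights = {}
    for i, n_id in counts.items():
        tf = n_id / n_d
        idf = math.log(n_docs / docs_with_word[i])
        if scheme == "tf":
            weights[i] = tf
        elif scheme == "idf":
            weights[i] = idf
        elif scheme == "tf-idf":
            weights[i] = tf * idf
        else:  # binary: every word present gets the same weight
            weights[i] = 1.0
    return weights


# Word 1 appears in 1 of 4 training documents, word 3 in all of them.
doc = [1, 1, 2, 3]
stats = {1: 1, 2: 2, 3: 4}
for scheme in SCHEMES:
    print(scheme, word_weights(doc, 4, stats, scheme))
```

Note how idf (and therefore tf-idf) drives the weight of word 3 to zero: a word seen in every training document carries no discriminative information.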

DBoW computes $N$ and $N_i$ from the images provided when the vocabulary is created. These values are fixed afterwards and do not depend on how many entries a Database object contains.
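The fact that $N$ and $N_i$ are frozen at vocabulary creation can be illustrated with a small sketch. `build_idf` is a hypothetical helper, not part of DBoW. It assumes each image is a list of word ids and returns a read-only mapping, so images added to a database later cannot change the idf weights.

```python
import math
from types import MappingProxyType


def build_idf(training_images):
    """Compute idf = log(N / N_i) once, from the images used to create the vocabulary."""
    n = len(training_images)                # N
    n_i = {}
    for words in training_images:
        for w in set(words):                # count each image at most once per word
            n_i[w] = n_i.get(w, 0) + 1      # N_i
    # Read-only view: later database insertions cannot modify these weights.
    return MappingProxyType({w: math.log(n / c) for w, c in n_i.items()})


idf = build_idf([[0, 1], [1, 2], [2, 3]])
database = []
database.append([0, 0, 3])  # growing the database...
database.append([1, 3])
# ...leaves idf untouched: it is still based on N = 3 training images.
print(dict(idf))
```

One consequence is that a word that is rare in the training images keeps a high weight even if it later becomes common among the database entries.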

Scoring

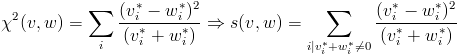

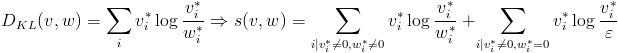

A score is calculated when two vectors are compared by means of a Vocabulary or when a Database is queried. The following metrics are implemented to calculate the score $s$ between two vectors $v$ and $w$ (from now on, $v^*$ and $w^*$ denote vectors normalized with the L1-norm): dot product, L1-norm, L2-norm, Bhattacharyya coefficient, $\chi^2$ distance and KL-divergence.
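The sketch below implements three of these scores on sparse vectors stored as Python dicts (word id to weight). It assumes common textbook formulations: the dot product $s = v \cdot w$, an L1 score $s = 1 - \frac{1}{2}\lVert v^* - w^* \rVert_1$, and the Bhattacharyya coefficient $s = \sum_i \sqrt{v^*_i w^*_i}$. DBoW's exact definitions and normalizations for each metric may differ, and the function names are hypothetical.

```python
import math


def l1_normalize(v):
    # v* = v / ||v||_1 (returns a copy unchanged if the vector is all zeros)
    total = sum(abs(x) for x in v.values())
    return {k: x / total for k, x in v.items()} if total else dict(v)


def dot_product(v, w):
    # s = v . w, summed over words present in both vectors
    return sum(x * w[k] for k, x in v.items() if k in w)


def l1_score(v, w):
    # s = 1 - 0.5 * ||v* - w*||_1; in [0, 1] for non-negative vectors
    v, w = l1_normalize(v), l1_normalize(w)
    keys = v.keys() | w.keys()
    return 1.0 - 0.5 * sum(abs(v.get(k, 0.0) - w.get(k, 0.0)) for k in keys)


def bhattacharyya(v, w):
    # s = sum_i sqrt(v*_i * w*_i); in [0, 1] for non-negative vectors
    v, w = l1_normalize(v), l1_normalize(w)
    return sum(math.sqrt(x * w[k]) for k, x in v.items() if k in w)


a = {1: 0.5, 2: 0.5}
b = {2: 0.5, 3: 0.5}
print(dot_product(a, b), l1_score(a, b), bhattacharyya(a, b))
```

Because both normalized scores map identical vectors to 1 and vectors with no words in common to 0, they can be compared across queries without knowing the vectors' magnitudes.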

The default configuration when creating a vocabulary is tf-idf weighting with L1-norm scoring.
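With the default settings, comparing two images amounts to building their tf-idf vectors, normalizing them with the L1-norm and computing an L1-based score. The sketch below assumes the score $s = 1 - \frac{1}{2}\lVert v^* - w^* \rVert_1$ and uses made-up corpus statistics ($N = 10$ and the `N_i` table). The helpers `tfidf_l1` and `l1_score` are hypothetical.

```python
import math
from collections import Counter


def tfidf_l1(words, n_docs, docs_with_word):
    # tf-idf weights w_i = (n_id / n_d) * log(N / N_i), then L1-normalized (v*)
    counts = Counter(words)
    v = {i: c / len(words) * math.log(n_docs / docs_with_word[i])
         for i, c in counts.items()}
    total = sum(v.values())
    return {i: x / total for i, x in v.items()} if total > 0 else v


def l1_score(v, w):
    # s = 1 - 0.5 * ||v* - w*||_1 for already L1-normalized vectors
    keys = v.keys() | w.keys()
    return 1.0 - 0.5 * sum(abs(v.get(k, 0.0) - w.get(k, 0.0)) for k in keys)


N = 10                               # training images (made-up)
N_i = {"a": 1, "b": 5, "c": 10}      # images containing each word (made-up)
img1 = tfidf_l1(["a", "b", "c"], N, N_i)
img2 = tfidf_l1(["a", "a", "c"], N, N_i)
print(l1_score(img1, img2))
```

Word `c` appears in every training image, so its idf, and hence its weight, is zero and it has no influence on the score; the similarity is driven entirely by the rarer words `a` and `b`.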
